Study Reveals Wintertime Formation of Large Pollution Particles in China’s Skies

Nov 16, 2023 —

Beijing pollution (Photo Kevin Dooley, Creative Commons)

Previous studies have found that the particles that float in the haze over the skies of Beijing include sulfate, a major source of outdoor air pollution that damages lungs and aggravates existing asthmatic symptoms, according to the California Air Resources Board.

Sulfates usually are produced by atmospheric oxidation in the summer, when ample sunlight facilitates the oxidation that turns sulfur dioxide into dangerous aerosol particles. How is it that China can produce such extreme pollution loaded with sulfates in the winter, when there’s not as much sunlight and atmospheric oxidation is slow?

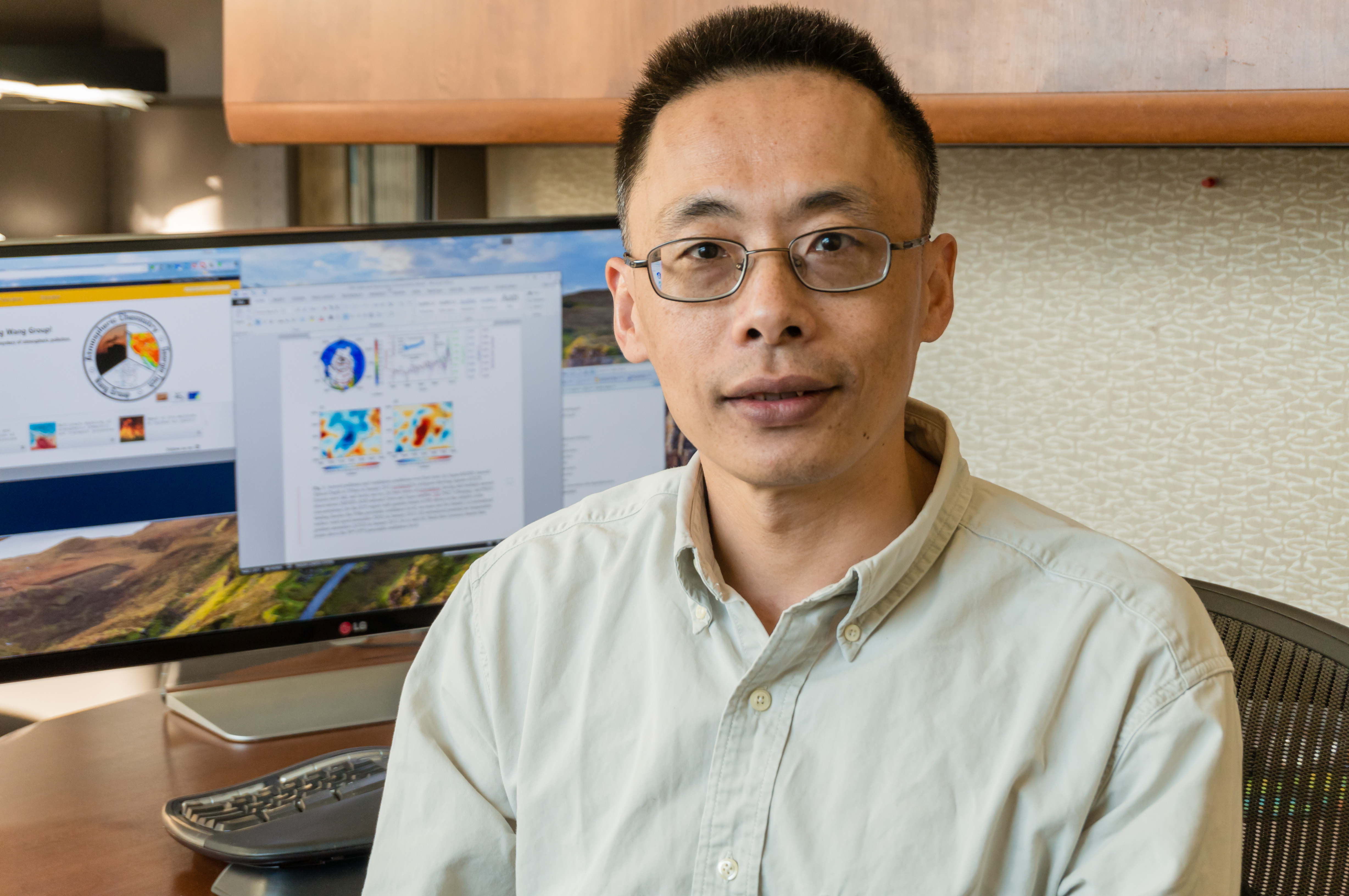

Yuhang Wang, professor in the School of Earth and Atmospheric Sciences at Georgia Tech, and his research team have conducted a study that may have the answer: All the chemical reactions needed to turn sulfur dioxide into sulfur trioxide, and then quickly into sulfate, primarily happen within the smoke plumes causing the pollution. That process not only creates sulfates in the winter in China, but it also happens faster and results in larger sulfate particles in the atmosphere.

“We call the source ‘in-source formation,’” Wang says. “Instead of having oxidants spread out in the atmosphere, slowly oxidizing sulfur dioxide into sulfur trioxide to produce sulfate, we have this concentrated production in the exhaust plumes that turns the sulfuric acid into large sulfate particles. And that's why we're seeing these large sulfate particles in China.”

The findings of in-source formation of larger wintertime sulfate particles in China could help scientists more accurately assess the impacts of aerosols on radiative forcing (the change in Earth’s energy balance caused by factors such as greenhouse gases and aerosols) and on health, since larger aerosols mean more deposition in human lungs.

The study, “Wintertime Formation of Large Sulfate Particles in China and Implications for Human Health,” is published in Environmental Science & Technology, an American Chemical Society publication. The co-authors include Qianru Zhang of Peking University and Mingming Zheng of Wuhan Polytechnic University, two of Wang’s former students who conducted the research while at Georgia Tech.

Explaining a historic smog

China still burns a lot of coal in power plants because coal costs less than natural gas, Wang says. That reliance on coal also invites an easy comparison between China’s hazy winters and a historic event that focused the United Kingdom’s attention on dangerous environmental hazards: the Great London Smog.

The event, depicted in the Netflix show “The Crown,” saw severe smog descend on London in December 1952. Unusually cold weather preceded the event and helped bring the coal-produced haze down to ground level. UK officials put the toll of the Great London Smog (also called the Great London Fog) at 4,000 deaths and 100,000 illnesses, although later studies estimated a higher death toll of 10,000 to 20,000.

“From the days of the London Fog to extreme winter pollution in China, it has been a challenge to explain how sulfate is produced in the winter,” Wang says.

Wang and his team decided to take on that challenge.

Aerosol size and heavy metal influence?

The higher sulfate levels in China, notably in January 2013, defy conventional explanations that rely on standard photochemical oxidation. An alternative explanation held that nitrogen dioxide or other mild oxidants in alkaline or neutral particles in the atmosphere were the cause. But measurements revealed that the resulting sulfate particles were highly acidic, which is hard to reconcile with that explanation.

“She was just looking for interesting things to do,” Wang says of Zheng’s time at Georgia Tech. “And I said, maybe this is what we should do — I wanted her to look at aerosol size distributions, how large the aerosols are.”

Zheng and Wang noticed that the sulfate particles from China’s winter haze were much larger than photochemically produced aerosols. Photochemical sulfate particles usually measure 0.3 to 0.5 microns, but the wintertime sulfate particles were closer to 1 micron. (A human hair is about 70 microns wide.) Aerosols distributed over a wider area would normally be smaller.
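To put the size difference in perspective, particle volume grows with the cube of diameter. The Python sketch below assumes spherical particles of equal density (a simplification; real sulfate aerosols vary in shape, water content, and density) and compares a 1-micron particle with the 0.3 to 0.5 micron particles typical of photochemical sulfate. The function names are illustrative only.

```python
import math


def sphere_volume_um3(diameter_um: float) -> float:
    """Volume in cubic microns of a sphere with the given diameter in microns."""
    return math.pi * diameter_um ** 3 / 6


def volume_ratio(large_um: float, small_um: float) -> float:
    """How many times more material a sphere of diameter large_um holds
    than one of diameter small_um (assumes equal density)."""
    return sphere_volume_um3(large_um) / sphere_volume_um3(small_um)


# Typical photochemical sulfate (0.3 to 0.5 microns) vs. the ~1 micron
# wintertime sulfate particles described in the study.
for small_um in (0.3, 0.5):
    print(f"1.0 um vs {small_um} um: {volume_ratio(1.0, small_um):.1f}x the volume")
```

Under these assumptions a 1-micron particle holds roughly 8 to 37 times as much material as a typical photochemical sulfate particle, which gives a sense of how much extra sulfate must accumulate on each particle.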

“The micron-sized aerosol observations imply that sulfate particles undergo substantial growth in a sulfur trioxide-rich environment,” Wang says. Larger particles increase the risks to human health.

“When aerosols are large, more is deposited in the front part of the respiratory system but less on the end part, such as alveoli,” he adds. “When accounting for the large size of particles, total aerosol deposition in the human respiratory system is estimated to increase by 10 to 30 percent.”

Something else still needs to join the chemical mix, however, for sulfur dioxide to turn into sulfur trioxide and for the resulting sulfate particles to grow so large. Wang says a potential pathway involves the catalytic oxidation of sulfur dioxide to sulfuric acid by “transition metals.”

High temperatures, acidity, and water content in the exhaust can greatly accelerate catalytic sulfur dioxide oxidation “compared to that in the ambient atmosphere. It is possible that similar heterogeneous processes occurring on the hot surface of a smokestack coated with transition metals could explain the significant portion of sulfur trioxide observed in coal-fired power plant exhaust,” Wang says.

“A significant amount of sulfur trioxide is produced, either during combustion or through metal-catalyzed oxidation at elevated temperatures.”
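For readers who want the chemistry spelled out, the two steps Wang describes (sulfur dioxide to sulfur trioxide, then sulfur trioxide to sulfuric acid, which forms sulfate particles) have the standard textbook stoichiometry below. This is a sketch of the overall reactions, not the study’s detailed mechanism; the label over the first arrow is a generic stand-in for the hot, transition-metal-coated surfaces Wang mentions and does not identify a specific catalyst.

```latex
\begin{aligned}
2\,\mathrm{SO_2} + \mathrm{O_2} &\xrightarrow{\text{metal catalyst, heat}} 2\,\mathrm{SO_3} \\
\mathrm{SO_3} + \mathrm{H_2O} &\longrightarrow \mathrm{H_2SO_4}
\end{aligned}
```

Without a catalyst, the first reaction is very slow at ambient temperatures, which is why hot, metal-rich exhaust matters; the second reaction proceeds readily once sulfur trioxide meets water vapor.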

An opportunity for cleaner-burning coal power plants

The importance of in-source sulfate formation suggests that cooling the emissions from coal-combustion facilities and removing sulfur trioxide, sulfuric acid, and particulates from them could cut down on pollution that can cause serious health problems.

“The development and implementation of such technology will benefit nations globally, particularly those heavily reliant on coal as a primary energy source,” Wang says.

DOI: https://doi.org/10.1021/acs.est.3c05645

Funding: This study was funded by the National Natural Science Foundation of China (nos. 41821005 and 41977311). Yuhang Wang was supported by the National Science Foundation Atmospheric Chemistry Program. Qianru Zhang would also like to thank the China Postdoctoral Science Foundation (2022M720005) and China Scholarship Council for support. Mingming Zheng is also supported by the Fundamental Research Funds for the Central Universities, Peking University (7100604309).

Yuhang Wang

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Physicists Focus on Neutrinos With New Telescope

Nov 16, 2023 —

The Trinity Demonstrator telescope. (Photo Nepomuk Otte)

Georgia Tech scientists will soon have another way to search for neutrinos, those hard-to-detect, high-energy particles speeding through the cosmos that hold clues to massive particle accelerators in the universe — if researchers can find them.

“The detection of a neutrino source or even a single neutrino at the highest energies is like finding a holy grail,” says Professor Nepomuk Otte, principal investigator for the Trinity Demonstrator telescope, which his group and collaborators recently built to detect neutrinos that interact inside the Earth.

The National Science Foundation (NSF)-funded effort will eventually create “the world’s most sensitive ultra-high energy neutrino telescope.” The Trinity Demonstrator is the first step toward an array of 18 telescopes located at three sites, each on top of a high mountain.

Earlier in the year, Otte’s group flew a neutrino telescope tethered to a massive NASA-funded balloon, though a leak ended the flight sooner than planned. The effort was part of the EUSO-SPB2 collaboration, which aims to study cosmic particle accelerators with detectors in space.

“This was the first time our group had built an instrument for a balloon mission,” Otte says. “And the big question was if it would work at the boundary to space at -40°F and in a vacuum. Even though we only flew 37 hours (of a 50-hour mission), we could show that our instrument worked as expected. We even accomplished some key measurements, like making a measurement of the background light, which no one has done before.”

The search for neutrinos

Otte is the second Georgia Tech physicist to lead a search for neutrinos. Professor Ignacio Taboada is the spokesperson for IceCube, an NSF neutrino observatory located at the South Pole. IceCube uses thousands of sensors buried in the ice to detect neutrinos.

Trinity telescopes, by contrast, will be especially sensitive to neutrinos at even higher energies. “With Trinity, we can potentially open a new, entirely unexplored window in astronomy,” Otte says. “IceCube gives us a couple of good pointers on what to observe. That is also why we modified the building of the Trinity Demonstrator to point toward the only two high-energy neutrino sources” already identified by IceCube scientists.

‘Cherenkov lights’ illuminate ‘air showers’

The Trinity Demonstrator telescope is not your typical astronomy telescope. Instead of looking up into the sky, it looks at the horizon, waiting for a flash of light that lasts only tens of billionths of a second.

That flash comes at the end of a chain of events that begins when a high-energy neutrino enters the Earth at a shallow angle. After penetrating Earth and traveling in a straight line for a hundred miles, the neutrino eventually interacts inside the Earth, producing a tau particle, a short-lived, much heavier cousin of the electron.

The tau continues through the Earth, and when it emerges from the ground, it decays, setting off a cascade of millions of electrons and positrons that zip through the air. Because these electrons and positrons travel faster than the speed of light in air, they emit Cherenkov light, the short flash that the Trinity Demonstrator telescope detects. Computer algorithms then analyze the recorded Cherenkov flashes to reconstruct the energy and arrival direction of the neutrino.
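The condition that shower particles must move faster than light does in air can be made concrete with the standard Cherenkov relations: emission requires a speed above c/n, where n is the refractive index, and the light forms a cone with cos θ = 1/(nβ). The Python sketch below assumes n ≈ 1.0003 for air near sea level; at the mountain-top altitudes where Trinity operates the air is thinner, n is closer to 1, and the threshold energy is higher. The constant and function names are illustrative, not taken from any Trinity analysis code.

```python
import math

ELECTRON_REST_ENERGY_MEV = 0.511  # rest energy of an electron or positron


def cherenkov_threshold_mev(n: float) -> float:
    """Minimum total energy (MeV) for an electron to emit Cherenkov light
    in a medium with refractive index n, i.e. a speed above c/n."""
    beta_min = 1.0 / n  # threshold speed as a fraction of c
    gamma_min = 1.0 / math.sqrt(1.0 - beta_min ** 2)  # matching Lorentz factor
    return gamma_min * ELECTRON_REST_ENERGY_MEV


def cherenkov_angle_deg(n: float, beta: float = 1.0) -> float:
    """Opening angle of the Cherenkov cone from cos(theta) = 1 / (n * beta)."""
    return math.degrees(math.acos(1.0 / (n * beta)))


N_AIR = 1.0003  # assumed refractive index of air near sea level

print(f"threshold ~ {cherenkov_threshold_mev(N_AIR):.1f} MeV")
print(f"cone angle ~ {cherenkov_angle_deg(N_AIR):.2f} degrees")
```

With n = 1.0003 the threshold works out to roughly 21 MeV of total energy and the cone opening angle to about 1.4 degrees, so only electrons and positrons above that energy contribute, and their light is beamed within a narrow cone around their direction of travel.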

Otte and his team of Georgia Tech postdoctoral and graduate scholars developed and built the Trinity Demonstrator. Undergraduate students have also had significant responsibilities in designing its optics. “It is good for the students because they are involved in all aspects of the experiment. In big collaborations, you are an expert on one aspect only,” Otte says.

The largest collaboration Otte is currently involved with is the Cherenkov Telescope Array, which involves more than 2,000 researchers. That planned international project will involve 60 next-generation gamma-ray telescopes in Chile and on the Canary Island of La Palma.

Next year, Otte says he and his researchers will apply for funding to build a much bigger telescope, which will be the foundation for the NSF 18-telescope array. For now, the team is busy observing with the Trinity Demonstrator atop Frisco Peak in Utah.

“With a bit of luck, we will detect the first neutrino source at these energies,” Otte says.

Funding: National Science Foundation (NSF)

The Trinity Demonstrator team, graduate scholar Jordan Bogdan, postdoctoral scholar Mariia Fedkevych, graduate scholar Sofia Stepanoff, and Professor Nepomuk Otte.

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Water on the Moon May Be Forming Due to Electrons From Earth

Nov 09, 2023 —

Scientists have discovered that electrons from Earth may be contributing to the formation of water on the Moon’s surface. The research, published in Nature Astronomy, has the potential to impact our understanding of how water — a critical resource for life and sustained future human missions to Earth's moon — formed and continues to evolve on the lunar surface.

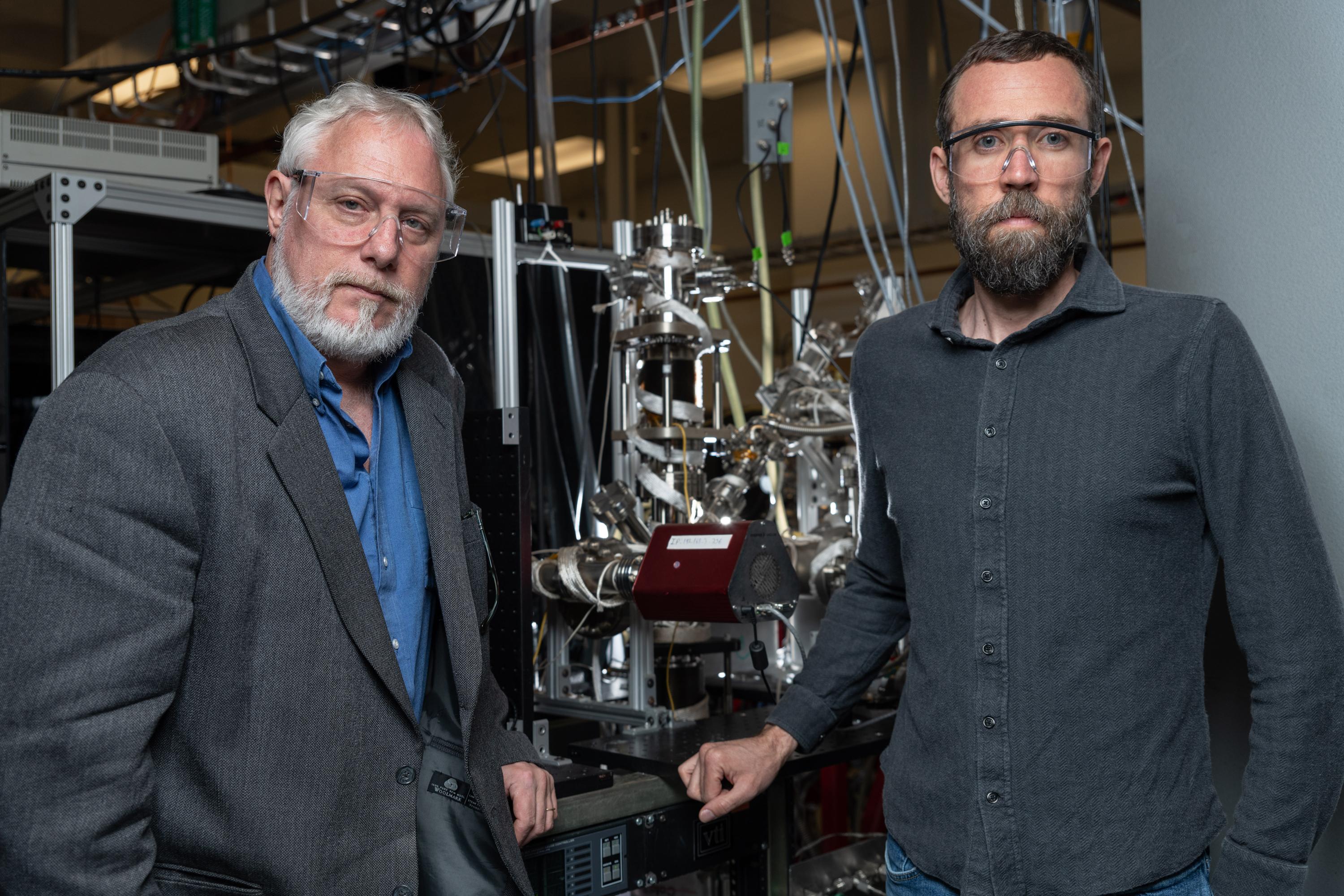

“Understanding how water is made on the Moon will help us understand how water was made in the early solar system and how water inevitably was brought to Earth,” says Thom Orlando, Regents' Professor in the School of Chemistry and Biochemistry with a joint appointment in the School of Physics, who played a critical role in the discovery alongside Brant Jones, a research scientist in the School of Chemistry and Biochemistry at Georgia Tech.

“Understanding water formation and transport on the Moon will help explain current and future observations,” Jones adds. “It can help predict areas with high concentrations of water that will help with mission planning and in situ resource utilization (ISRU) mining. This is absolutely necessary for sustained human presence on the Moon.”

The role of solar wind

“Water production on airless bodies is driven by a combination of solar wind, heat, ionizing radiation and meteorite impacts,” Jones explains. Solar wind — a continuous stream of protons and electrons emitted by the Sun, traveling at 250 to 500 miles per second — is widely thought to be one of the primary ways in which water has been formed on the Moon.
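The solar wind speed quoted here is given in miles per second. A small Python sketch converts it to kilometers per second and estimates how long the wind takes to cross the roughly one astronomical unit between the Sun and the Earth-Moon system. It assumes a constant speed and a straight-line path, which real solar wind does not strictly follow, so the result is an order-of-magnitude illustration only; the function names are hypothetical.

```python
KM_PER_MILE = 1.609344        # exact definition of the international mile
AU_KM = 149_597_870.7         # astronomical unit (mean Sun-Earth distance) in km
SECONDS_PER_DAY = 86_400


def miles_per_s_to_km_per_s(speed_mi_s: float) -> float:
    """Convert a speed from miles per second to kilometers per second."""
    return speed_mi_s * KM_PER_MILE


def transit_days(speed_mi_s: float, distance_km: float = AU_KM) -> float:
    """Days needed to cover distance_km at a constant speed in miles per second."""
    return distance_km / miles_per_s_to_km_per_s(speed_mi_s) / SECONDS_PER_DAY


for speed in (250, 500):
    print(f"{speed} mi/s = {miles_per_s_to_km_per_s(speed):.0f} km/s, "
          f"~{transit_days(speed):.1f} days to travel 1 AU")
```

Under those assumptions, 250 to 500 miles per second corresponds to roughly 400 to 800 km/s and a Sun-to-Moon transit time of about two to four days.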

While solar wind buffets the Moon’s surface, Earth is protected by its magnetosphere, a magnetic shield generated by molten metal circulating deep in Earth’s interior. The solar wind in turn shapes the magnetosphere: charged particles channeled toward the poles produce the northern lights (aurora borealis) and southern lights (aurora australis), and the solar wind stretches the otherwise rounded shield into a long “tail,” the magnetotail, which the Moon passes through periodically as it orbits Earth. When the Moon is in the magnetotail region, it is temporarily shielded from solar wind protons but still exposed to photons from the Sun.

"This provides a natural laboratory for studying the formation processes of lunar surface water," says University of Hawai‘i (UH) at Mānoa Assistant Researcher Shuai Li, who led the research study. "When the Moon is outside of the magnetotail, the lunar surface is bombarded with solar wind. Inside the magnetotail, there are almost no solar wind protons and water formation was expected to drop to nearly zero."

Surprisingly, while the Moon was within the magnetotail and shielded from solar wind, the researchers found that the rate of water formation did not change. Since water was still forming in the absence of solar wind, the researchers began to consider what else could be responsible.

Building on previous research, Orlando and Jones hypothesized that electrons from Earth could be responsible.

A work in progress

“This work was actually based, in part, on our previous studies examining the role of ionizing radiation in metal-oxide particles present in nuclear waste storage tanks,” Orlando explains, adding that in a previous project as part of an Energy Frontier Research Center called IDREAM, they showed that water forms when a mineral called boehmite is irradiated with electrons produced by energetic particles after radioactive decay.

While boehmite does not exist on the Moon’s surface, minerals with similar compositions are present, and Orlando and Jones theorized that, like the boehmite, irradiation from electrons might be producing water on lunar surface grains. “Despite the incredibly different physical environments,” Orlando says, “the chemistry and physics is likely very similar.”

The solar wind water cycle could have a major impact on human exploration of the Moon and beyond. “While some of these water molecules will be destroyed by the Sun, some will eventually make it to the cold spots in permanently shadowed regions at higher latitudes,” Orlando says, in “the regions where some of the planned Artemis landings will be.” The next step? “Our hope is to prove that the hypothesis is correct!”

Orlando and Jones have been studying the role of solar wind on the in situ production of water on the Moon, Mercury, and other airless bodies as part of the NASA Solar System Exploration Research Virtual Institute (SSERVI) center called Radiation Effects on Volatile Exploration of Asteroids and Lunar Surfaces (REVEALS).

The realization that electrons from Earth were part of the dynamic lunar water cycle was a direct result of the interactions of several scientists with diverse backgrounds made possible by the SSERVI support. The work, which will further expand on the solar wind water cycle — including other sources of energy beyond surface temperature like meteorite and electron impacts — will continue in a new Georgia Tech-led SSERVI program, the Center for Lunar Environment and Volatile Exploration Research (CLEVER).

Written by Selena Langner

Editor: Jess Hunt-Ralston

Digging Into Greenland Ice: Unraveling Mysteries in Earth's Harshest Environments

Nov 06, 2023 —

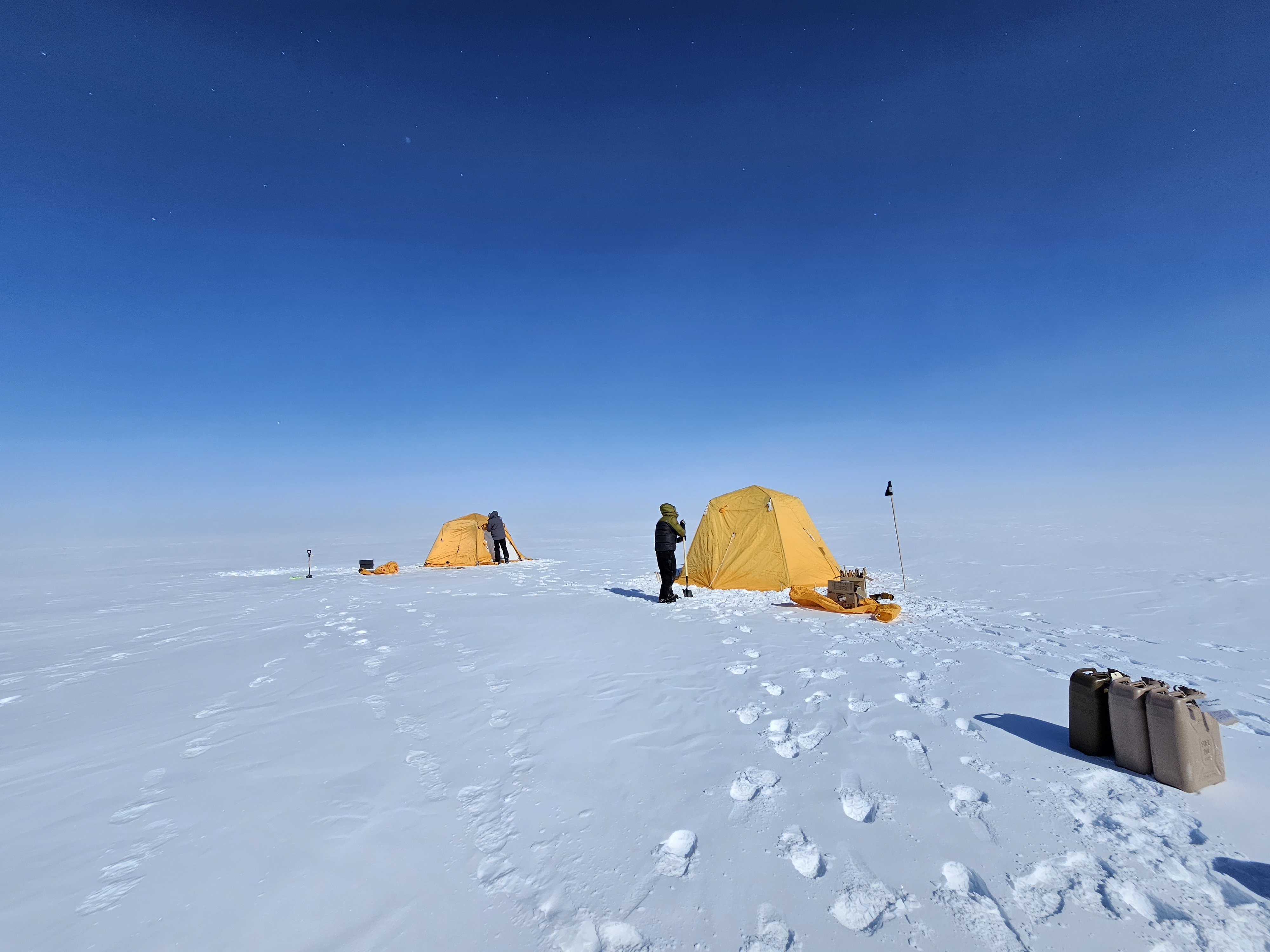

“You're in the middle of an ice sheet, and it’s one of the most desolate places on Earth. There are no living animals there. There are no plants there. The only animals you see are birds. They might be lost.”

That’s how Rachel Moore describes the view from the top of the Greenland ice sheet. “It's a really challenging environment, but it was really, really interesting to be there. I was there for nearly 50 days.”

Moore is an expert at collecting data in difficult research environments, traveling to some of the most extreme places on Earth to study microbes and the hints they might offer about astrobiology.

“It all started in grad school, when I joined a microbial ecology lab,” Moore recalls. “I pretty quickly learned that I love to do really difficult, challenging projects. I got interested in working around fire, biomass burning and forests, and I started collecting bacteria from the air. That was a challenge in and of itself, just trying to collect these really tiny things while standing in the smoke from the forest fires. But from that I learned that I loved to go out into the environment and collect things and try to understand everything around me.”

“I have a lot of different projects, but they all connect through astrobiology,” Moore says. “I’m interested in anything that hasn't been answered yet.” Moore is also leading a project called EXO Methane, which is investigating whether different Archaea could survive in Martian and Enceladus-like environments. She’s also collaborating on a project that will send a probe to Venus next year.

Moore started her postdoctoral research at Georgia Tech and is now continuing her work as a Research Scientist in the same laboratory. “The first project I started in this lab focused around how microbes can survive a really, really dry environment,” she adds. To study this, Moore traveled to the Atacama Desert in Chile, the driest place on Earth and one of the best analogs to the surface of Mars. “What we were interested in there is how organisms survive intense radiation and intense desiccation. And how does that change as you look at different sites in the Atacama?”

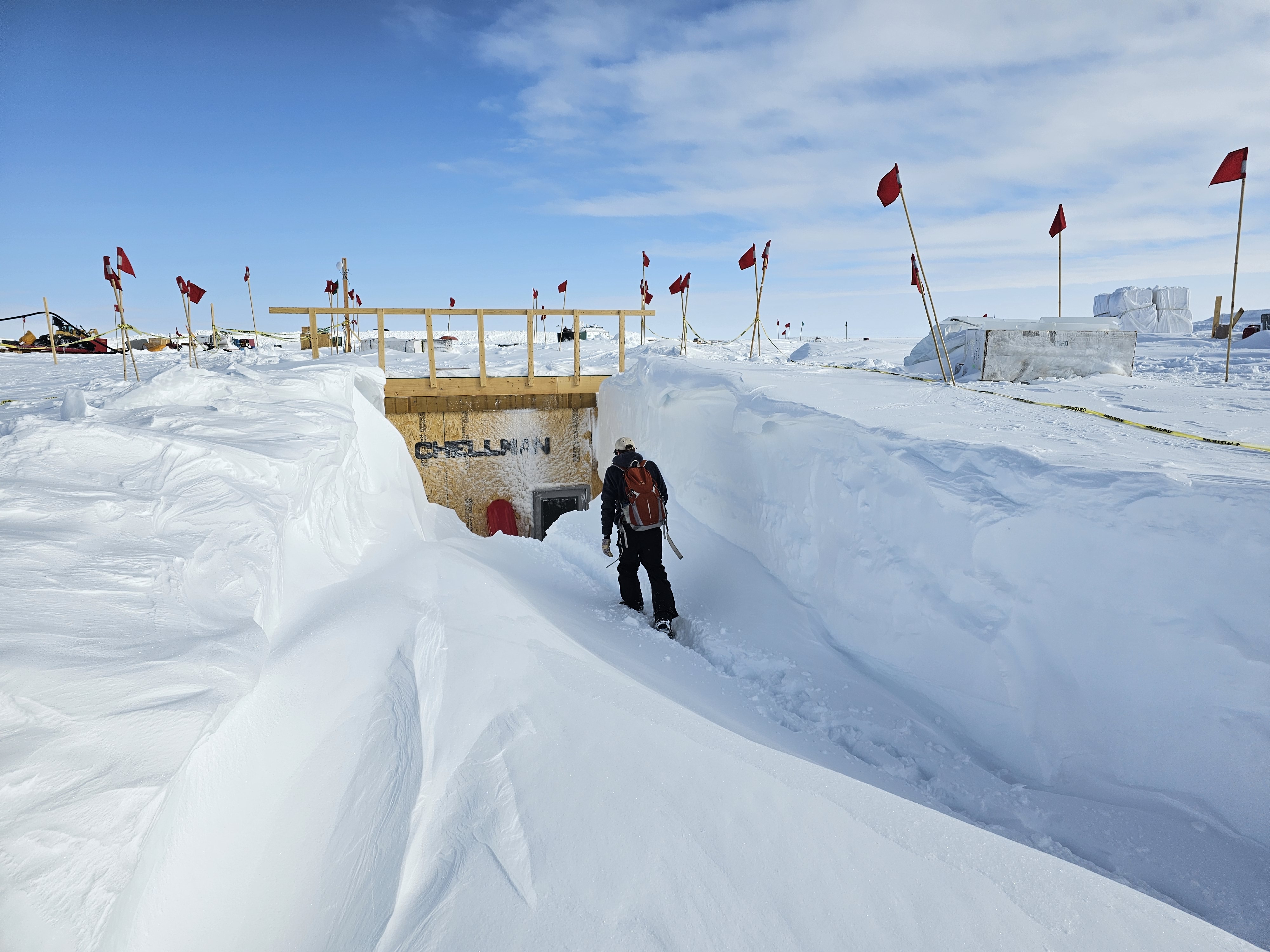

Then, this past summer, Moore traveled to another extreme environment — Greenland. “Instead of being hot and dry, Greenland is extremely cold and dry,” Moore explains. “So it was similar in some aspects, but completely different in terms of logistics and sampling methods. Because we were there in the summer, the sun never set. We were also at high elevation — 10,530 feet above sea level.”

Beneath the ice

The project was started by Nathan Chellman and Joe McConnell from the Desert Research Institute (DRI), and Moore’s role this year was to investigate the microbiology component of the research. “They had been seeing some anomalies in methane and carbon monoxide in ice samples,” Moore says. “We were curious if microbes might be producing some of this, either in the ice core after it’s been sampled, or while it’s still in the glacier.”

“The microbes would not be swimming around or anything” in the ice cores, Moore explains, “but it’s possible that their metabolism is still active, and they’re potentially able to make some of the gases, like methane, in this frozen environment. Our goal was to measure these things in the environment.”

Gathering samples wasn’t easy. “We set up a lab on the glacier, and we set it up in a trench to try to keep any of the ice cores that we pulled out roughly at the same temperature as the glacier itself,” Moore says. Because of that, “weather was a huge, huge thing. Anytime it would get stormy, the wind would blow all of the snow around, and it would fill the entrance to our trench. We had to dig ourselves out several times. People would put out flags so that you could see your way back to the main house or back to your dorms.”

The team hopes that this research will give a more defined record of the past from the Greenland ice sheet, improving climate change predictions. Moore also notes applications in astrobiology, adding that “there are a lot of icy worlds like Mars, Enceladus, and Europa, with either an icy crust over the ocean or glaciers on the northern and southern poles.”

Moore was also able to test new technology in the field: a tool built by Georgia Tech undergraduates working with her advisor, Christopher Carr, assistant professor in the School of Earth and Atmospheric Sciences. An ice melter that can be used to take and clean ice samples, the tool is a miniaturized prototype that may be able to help take measurements on Mars or in similar remote environments in the future.

“Being able to take a tool that Georgia Tech undergraduates made to Greenland and test it on 600-year-old ice in the field was a really cool experience,” Moore adds. “We brought Starlink with us, and so I was able to video call the undergraduate team while I was testing their tool, which was really special.”

In the lab, the team is now analyzing ice cores brought back from Greenland to determine which microbes might be present and potentially active. “It's really interesting to see: Is this all chemistry? Is it biology based? Or is there some intersection of the two?” Moore says. “Maybe there's some chemistry or photochemistry happening, plus some biology happening. Whatever it is, we'll have to wait and see.”

Written by Selena Langner

Editor: Jess Hunt-Ralston

AI/ML Conference Helps School of Physics Launch New Research Initiative

Nov 01, 2023 —

Physicists from around the country come to Georgia Tech for a recent machine learning conference. (Photo Benjamin Zhao)

The School of Physics’ new initiative to catalyze research using artificial intelligence (AI) and machine learning (ML) began October 16 with a conference at the Global Learning Center titled Revolutionizing Physics — Exploring Connections Between Physics and Machine Learning.

AI and ML have the spotlight right now in science, and the conference promises to be the first of many, says Feryal Özel, Professor and Chair of the School of Physics.

"We were delighted to host the AI/ML in Physics conference and see the exciting rapid developments in this field,” Özel says. “The conference was a prominent launching point for the new AI/ML initiative we are starting in the School of Physics."

That initiative includes hiring two tenure-track faculty members, who will benefit from substantial expertise and resources in artificial intelligence and machine learning that already exist in the Colleges of Sciences, Engineering, and Computing.

The conference attendees heard from colleagues about how the technologies were helping with research involving exoplanet searches, plasma physics experiments, and sifting through terabytes of data. They also learned that a rough keyword search of proposal titles by Andreas Berlind, director of the National Science Foundation’s Division of Astronomical Sciences, showed that about a fifth of all current NSF grant proposals include components involving artificial intelligence and machine learning.

“That’s a lot,” Berlind told the audience. “It’s doubled in the last four years. It’s rapidly increasing.”
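Berlind’s two figures can be combined in a quick back-of-the-envelope calculation. The Python sketch below assumes that “it” refers to the share of proposals with AI/ML components and that the share grew at a steady compounded rate; neither assumption is stated in the talk, so treat the numbers as illustrative. The function name is hypothetical.

```python
def implied_annual_growth(factor: float, years: float) -> float:
    """Constant yearly growth rate that multiplies a quantity by `factor` over `years`."""
    return factor ** (1.0 / years) - 1.0


current_share = 0.20                # "about a fifth" of proposals (approximate)
earlier_share = current_share / 2   # assumes the share itself doubled over four years
annual_rate = implied_annual_growth(2.0, 4)

print(f"share four years ago ~ {earlier_share:.0%}")
print(f"implied growth ~ {annual_rate:.1%} per year")
```

Under those assumptions the share would have been about 10 percent four years earlier, and doubling in four years corresponds to growth of roughly 19 percent per year.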

Berlind was one of three program officers from the NSF and NASA invited to the conference to give presentations on the funding landscape for AI/ML research in the physical sciences.

“It’s tool development, the oldest story in human history,” said Germano Iannacchione, director of the NSF’s Division of Materials Research, who added that AI/ML tools “help us navigate very complex spaces — to augment and enhance our reasoning capabilities, and our pattern recognition capabilities.”

That sentiment was echoed by Dimitrios Psaltis, School of Physics professor and a co-organizer of the conference.

“They usually say if you have a hammer, you see everything as a nail,” Psaltis said. “Just because we have a tool doesn't mean we're going to solve all the problems. So we're in the exploratory phase because we don't know yet which problems in physics machine learning will help us solve. Clearly it will help us solve some problems, because it's a brand new tool, and there are other instances when it will make zero contribution. And until we find out what those problems are, we're going to just explore everything.”

That means trying to find out if there is a place for the technologies in classical and modern physics, quantum mechanics, thermodynamics, optics, geophysics, cosmology, particle physics, and astrophysics, to name just a few branches of study.

Sanaz Vahidinia of NASA’s Astronomy and Astrophysics Research Grants told the attendees that her division was an early and enthusiastic adopter of AI and machine learning. She listed examples of the technologies assisting with gamma-ray astronomy and analyzing data from the Hubble and Kepler space telescopes. “AI and deep learning were very good at identifying patterns in Kepler data,” Vahidinia said.

Several presentations at the conference highlighted the pattern-recognition capabilities and other features of AI and ML:

- Cassandra Hall, assistant professor of Computational Astrophysics at the University of Georgia, illustrated how machine learning helped in the search for hidden forming exoplanets.

- Christopher J. Rozell, Julian T. Hightower Chair and Professor in the School of Electrical and Computer Engineering, spoke of his experiments using “explainable AI” (AI that conveys in human terms how it reaches its decisions) to track depression recovery with deep brain stimulation.

- Paulo Alves, assistant professor of physics at UCLA College of Physical Sciences Space Institute, presented on AI/ML as tools of scientific discovery in plasma physics.

Alves’s presentation inspired another physicist attending the conference, Psaltis said. “One of our local colleagues, who's doing magnetic materials research, said, ‘Hey, I can apply the exact same thing in my field,’ which he had never thought about before. So we not only have cross-fertilization (of ideas) at the conference, but we’re also learning what works and what doesn't.”

More information on funding and grants is available from the National Science Foundation and from NASA.

School of Physics Professor Tamara Bogdanovic prepares to ask a question at the recent machine learning conference at Georgia Tech. (Photo Benjamin Zhao)

Matthew Golden, graduate student researcher in the School of Physics, presents at a recent machine learning conference at Georgia Tech. (Photo Benjamin Zhao)

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

IDEaS Awards Grants and Cyberinfrastructure Resources for Thematic Programs and Research in AI

Oct 20, 2023 —

In keeping with a strong strategic focus on AI for the 2023-2024 academic year, the Institute for Data Engineering and Science (IDEaS) has announced the winners of its 2023 Seed Grants for Thematic Events in AI and Cyberinfrastructure Resource Grants to support research in AI requiring secure, high-performance computing capabilities. Thematic event award recipients will receive $8K to support their proposed workshop or series, and Cyberinfrastructure winners will receive research support consisting of 600,000 CPU hours on the AMD Genoa server, 36,000 hours of NVIDIA DGX H100 GPU server usage, and 172 TB of secure storage.

Congratulations to the award winners listed below!

Thematic Events in AI Awards

Proposed Workshop: “Foundation of scientific AI (Artificial Intelligence) for Optimization of Complex Systems”

Primary PI: Raphael Pestourie, Assistant Professor, School of Computational Science and Engineering

Secondary PI: Peng Chen, Assistant Professor, School of Computational Science and Engineering

Proposed Series: “Guest Lecture Seminar Series on Generative Art and Music”

Primary PI: Gil Weinberg, Professor, School of Music

Cyberinfrastructure Resource Awards

Title: Human-in-the-Loop Musical Audio Source Separation

Topics: Music Informatics, Machine Learning

Primary PI: Alexander Lerch, Associate Professor, School of Music

Co-PIs: Karn Watcharasupat, Music Informatics Group | Yiwei Ding, Music Informatics Group | Pavan Seshadri, Music Informatics Group

Title: Towards A Multi-Species, Multi-Region Foundation Model for Neuroscience

Topics: Data-Centric AI, Neuroscience

Primary PI: Eva Dyer, Assistant Professor, Biomedical Engineering

Title: Multi-point Optimization for Building Sustainable Deep Learning Infrastructure

Topics: Energy Efficient Computing, Deep Learning, AI Systems Optimization

Primary PI: Divya Mahajan, Assistant Professor, School of Electrical and Computer Engineering, School of Computer Science

Title: Neutrons for Precision Tests of the Standard Model

Topics: Nuclear/Particle Physics, Computational Physics

Primary PI: Aaron Jezghani, OIT-PACE

Title: Continual Pretraining for Egocentric Video

Primary PI: Zsolt Kira, Assistant Professor, School of Interactive Computing

Co-PI: Shaunak Halbe, Ph.D. Student, Machine Learning

Title: Training More Trustworthy LLMs for Scientific Discovery via Debating and Tool Use

Topics: Trustworthy AI, Large-Language Models, Multi-Agent Systems, AI Optimization

Primary PIs: Chao Zhang, School of Computational Science and Engineering & Bo Dai, College of Computing

Title: Scaling up Foundation AI-based Protein Function Prediction with IDEaS Cyberinfrastructure

Topics: AI, Biology

Primary PI: Yunan Luo, Assistant Professor, School of Computational Science and Engineering


Christa M. Ernst - Research Communications Program Manager

Robotics | Data Engineering | Neuroengineering

IDEaS Awards 2023 Seed Grants to Seven Interdisciplinary Research Teams

Oct 20, 2023 —

The teams awarded will focus on strategic new initiatives in Artificial Intelligence.

The Institute for Data Engineering and Science, in conjunction with several Interdisciplinary Research Institutes (IRIs) at Georgia Tech, has awarded seven teams of researchers from across the Institute a total of $105,000 in seed funding intended to better position Georgia Tech to perform world-class interdisciplinary research in data science and artificial intelligence development and deployment.

The goals of the funded proposals include identifying prominent emerging research directions in AI, shaping IDEaS’ future strategy in the initiative area, building an inclusive and active community of Georgia Tech researchers in the field that may include external collaborators, and identifying and preparing to compete for large-scale grant opportunities in AI and its use in other research fields.

Below are the 2023 recipients and the co-sponsoring IRIs:

Proposal Title: “AI for Chemical and Materials Discovery” + “AI in Microscopy Thrust”

PI: Victor Fung, CSE | Vida Jamali, ChBE | Pan Li, ECE | Amirali Aghazadeh Mohandesi, ECE

Award: $20k (co-sponsored by IMat)

Overview: The goal of this initiative is to bring together expertise in machine learning/AI, high-throughput computing, computational chemistry, and experimental materials synthesis and characterization to accelerate material discovery. Computational chemistry and materials simulations are critical for developing new materials and understanding their behavior and performance, as well as aiding in experimental synthesis and characterization. Machine learning and AI play a pivotal role in accelerating material discovery through data-driven surrogate models, as well as high-throughput and automated synthesis and characterization.

Proposal Title: “AI + Quantum Materials”

PI: Zhigang Jiang, Physics | Martin Mourigal, Physics

Award: $20k (co-sponsored by IMat)

Overview: Zhigang Jiang is currently leading an initiative within IMat entitled “Quantum responses of topological and magnetic matter” to nurture multi-PI projects. By cross-cutting the IMat initiative with this IDEaS call, we propose to support and feature applications of AI to predictive and inverse problems in quantum materials. Understanding the limits and capabilities of AI methodologies is a major barrier to entry for physics students, because researchers in that field already need heavy training in quantum mechanics, low-temperature physics, and chemical synthesis. Our most pressing need is for our AI-inclined quantum materials students to find a broader community to engage with and learn from. This is the primary problem we aim to solve with this initiative.

Proposal Title: “Harnessing Large Language Models for Targeted and Effective Small Molecule Library Design in Challenging Disease Treatment”

PI: Jeffrey Skolnick, Bio Sci | Chao Zhang, CSE

Award: $15k (co-sponsored by IBB)

Overview: Our objective is to use large language models (LLMs) in conjunction with AI algorithms to identify effective driver proteins, develop screening algorithms that target appropriate binding sites while avoiding deleterious ones, and consider bioavailability and drug resistance factors. LLMs can rapidly analyze vast amounts of information from literature and bioinformatics tools, generating hypotheses and suggesting molecular modifications. By bridging multiple disciplines such as biology, chemistry, and pharmacology, LLMs can provide valuable insights from diverse sources, assisting researchers in making informed decisions. Our aim is to establish a first-in-class, LLM-driven research initiative at Georgia Tech that focuses on designing highly effective small molecule libraries to treat challenging diseases. This initiative will go beyond existing AI approaches to molecule generation, which often consider only simple properties like hydrogen bonding or rely on a limited set of proteins to train the LLM and therefore lack generalizability. As a result, this initiative is expected to consistently produce safe and effective disease-specific molecules.

Proposal Title: “AI for Climate Resilient Energy Systems”

PI: Yiyi He, School of City & Regional Planning | Jun Rentschler, World Bank

Award: $15k (co-sponsored by SEI)

Overview: We are committed to building a team of interdisciplinary and transdisciplinary researchers and practitioners with a shared goal: developing a new framework that models future climatic variations and the interconnected, interdependent energy infrastructure network as complex systems. To achieve this, we will harness cutting-edge climate model outputs from the Coupled Model Intercomparison Project (CMIP) and integrate machine learning and deep learning approaches. This combination of data and techniques will enable us to gain deep insight into future extreme weather conditions induced by climate change and their immediate and long-term ramifications for energy infrastructure networks. The seed grant from IDEaS is the crucial catalyst for kick-starting this ambitious endeavor. It will empower us to form a collaborative and inclusive community of GT researchers from various domains, including City and Regional Planning, Earth and Atmospheric Sciences, Computer Science and Electrical Engineering, and Civil and Environmental Engineering. By drawing on the expertise and perspectives of these diverse fields, we aim to foster an environment where innovative ideas and solutions can flourish. In addition to our internal team, we plan to collaborate with external partners, including the World Bank, the Stanford Doerr School of Sustainability, and the Berkeley AI Research Initiative, who share our vision of addressing the complex challenges at the intersection of climate and energy infrastructure.

Proposal Title: “Physics-informed Deep Learning for Real-time Forecasting of Urban Flooding”

PI: Jian Luo, Civil & Environmental Engineering | Yi Deng, EAS

Award: $15k (co-sponsored by BBISS)

Overview: Our research team sees a significant emerging trend in the exploration of AI applications for urban flood hazard forecasting. Georgia Tech possesses a wealth of interdisciplinary expertise, positioning us to make a pioneering contribution to this burgeoning field. We aim to harness the combined strengths of Georgia Tech's experts in civil and environmental engineering, atmospheric and climate science, and data science to chart new territory in this area. Furthermore, we envision extending our research toward the development of a real-time hazard forecasting application. This application would incorporate adaptation and mitigation strategies developed in collaboration with local government agencies, emergency management departments, and researchers in computer engineering and the social sciences. Such a holistic approach would address the multifaceted challenges posed by urban flooding. To the best of our knowledge, Georgia Tech currently lacks a dedicated team focused on the fusion of AI and climate/flood research, making this initiative even more pioneering and impactful.

Proposal Title: “AI for Recycling and Circular Economy”

PI: Valerie Thomas, ISyE and PubPoly | Steven Balakirsky, GTRI

Award: $15k (co-sponsored by BBISS)

Overview: Most asset management and recycling technology has not changed in decades. The use of bar codes and RFID has provided some benefits, such as in retail returns management. Automated sorting of recyclables using magnets, eddy currents, and laser-based plastics identification has improved municipal recycling. Yet the overall field has been held back because identifying products, whether in use or at end of life, is not quite easy enough. AI approaches, including computer vision, data fusion, and machine learning, provide the additional capability to make asset management and product recycling easy enough to be nearly autonomous. Georgia Tech is well suited to lead the development of this application. With its strengths in machine learning, robotics, sustainable business, supply chains and logistics, and technology commercialization, Georgia Tech has the multidisciplinary capability to make this concept a reality, both in research and in commercial application.

Proposal Title: “Data-Driven Platform for Transforming Subjective Assessment into Objective Processes for Artistic Human Performance and Wellness”

PI: Milka Trajkova, Research Scientist, School of Literature, Media, and Communication | Brian Magerko, School of Literature, Media, and Communication

Award: $15k (co-sponsored by IPaT)

Overview: Artistic human movement at large stands on the cusp of a data-driven renaissance. By leveraging novel tools, we can usher in a transparent, data-driven, and accessible training environment. The potential ramifications extend beyond dance. Just as sports analytics have reshaped our understanding of athletic prowess, a similar approach to dance could redefine our understanding of human movement, with implications spanning healthcare, construction, rehabilitation, and active aging. Georgia Tech, with its strengths in AI, HCI, and biomechanics, is primed to lead this exploration. To realize this vision, we propose the following research questions, using ballet as a prime example of one of the most complex types of artistic movement: 1) What kinds of data (real-time kinematic, kinetic, biomechanical, etc.), captured through accessible off-the-shelf technologies, are essential for effective AI assessment in ballet education for young adults? 2) How can we design and develop an end-to-end ML architecture that assesses artistic and technical performance? 3) What feedback elements (combinations of timing, communication mode, feedback nature, polarity, and visualization) are most effective for AI-based dance assessment? 4) How does AI-assisted feedback enhance physical wellness, artistic performance, and the learning process in young athletes compared to traditional methods?

- Christa M. Ernst

Christa M. Ernst | Research Communications Program Manager

Robotics | Data Engineering | Neuroengineering

christa.ernst@research.gatech.edu

Three Earth and Atmospheric Sciences Researchers Awarded DOE Earthshot Funding for Carbon Removal Strategies

Oct 19, 2023 — Atlanta, GA

Earth (Credit NASA/Joshua Stevens)

Three Georgia Tech School of Earth and Atmospheric Sciences researchers (Professor and Associate Chair Annalisa Bracco, Professor Taka Ito, and Georgia Power Chair and Associate Professor Chris Reinhard) will join colleagues from Princeton University, Texas A&M University, and Yale University on an $8 million Department of Energy (DOE) grant to build an “end-to-end framework” for studying the impact of carbon dioxide removal efforts on land, rivers, and seas.

The proposal is one of 29 DOE Energy Earthshot Initiatives projects recently granted funding, and among several led by and involving Georgia Tech investigators across the Sciences and Engineering.

Overall, DOE is investing $264 million to develop solutions for the scientific challenges underlying the Energy Earthshot goals. The 29 projects also include establishing 11 Energy Earthshot Research Centers led by DOE National Laboratories.

The Energy Earthshots connect the Department of Energy's basic science and energy technology offices to accelerate breakthroughs towards more abundant, affordable, and reliable clean energy solutions — seeking to revolutionize many sectors across the U.S., and relying on fundamental science and innovative technology to be successful.

Carbon Dioxide Removal

The School of Earth and Atmospheric Sciences project, “Carbon Dioxide Removal and High-Performance Computing: Planetary Boundaries of Earth Shots,” is part of the agency’s Science Foundations for the Energy Earthshots program. Its goal is to create a publicly accessible computer modeling system that will track progress in two key carbon dioxide removal (CDR) processes: enhanced earth weathering and global ocean alkalinization.

In enhanced earth weathering, minerals such as crushed basalt are spread on land, where they react with CO2 dissolved in rainwater and convert it into bicarbonate. Rivers then wash the bicarbonate into the oceans, where the carbon is eventually trapped on the ocean floor. If used at scale, these nature-based climate solutions could remove atmospheric carbon dioxide and alleviate ocean acidification.

The research team notes that there is currently “no end-to-end framework to assess the impacts of enhanced weathering or ocean alkalinity enhancement — which are likely to be pursued at the same time.”

“The proposal is for a three-year effort, but our hope is that the foundation we lay down in that time will represent a major step forward in our ability to track carbon from land to sea,” says Reinhard, the Georgia Power Chair who is a co-investigator on the grant.

“Like many folks interested in better understanding how climate interventions might impact the Earth system across scales, we are in some ways building the plane in midair,” he adds. “We need to develop and validate the individual pieces of the system — soils, rivers, the coastal ocean — but also wire them up and prove from observations on the ground how a fully integrated model works.”

That will involve the use of several existing computer models, along with Georgia Tech’s PACE supercomputers, Professor Ito explains. “We will use these models as a tool to better understand how the added alkalinity, carbon and weathering byproducts from the soils and rivers will eventually affect the cycling of nutrients, alkalinity, carbon and associated ecological processes in the ocean,” Ito adds. “After the model passes the quality check and we have confidence in our output, we can start to ask many questions about assessment of different carbon sequestration approaches or downstream impacts on ecosystem processes.”

Professor Bracco, whose recent research has focused on rising ocean heat levels, says CDR is needed just to keep ocean systems from warming by about 2 degrees Celsius.

“Ninety percent of the excess heat caused by greenhouse gas emissions is in the oceans,” Bracco shares, “and even if we stop emitting altogether tomorrow, that change we imprinted will continue to impact the climate system for many hundreds of years to come. So in terms of ocean heat, CDRs will help in not making the problem worse, but we will not see an immediate cooling effect on ocean temperatures. Stabilizing them, however, would be very important.”

Bracco and co-investigators will study the soil-river-ocean enhanced weathering pipeline “because it’s definitely cheaper and closer to scale-up.” Reverse weathering can also happen on the ocean floor, where new clays form chemically from ocean water and marine sediments in a process that also involves CO2. “The cost, however, is higher at the moment. Anything that has to be done in the ocean requires ships and oil to begin,” she adds.

Reinhard hopes any tools developed for the DOE project will be used by farmers and other land managers to make informed decisions on how and when to manage their soil, while giving them data on the downstream impacts of those practices.

“One of our key goals will also be to combine our data from our model pipeline with historical observational data from the Mississippi watershed and the Gulf of Mexico,” Reinhard says. “This will give us some powerful new insights into the impacts large-scale agriculture in the U.S. has had over the last half-century, and will hopefully allow us to accurately predict how business-as-usual practices and modified approaches will play out across scales.”

(From left) Annalisa Bracco, Taka Ito, Chris Reinhard

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

Physicists Solve Mysteries of Microtubule Movers

Aug 31, 2023 —

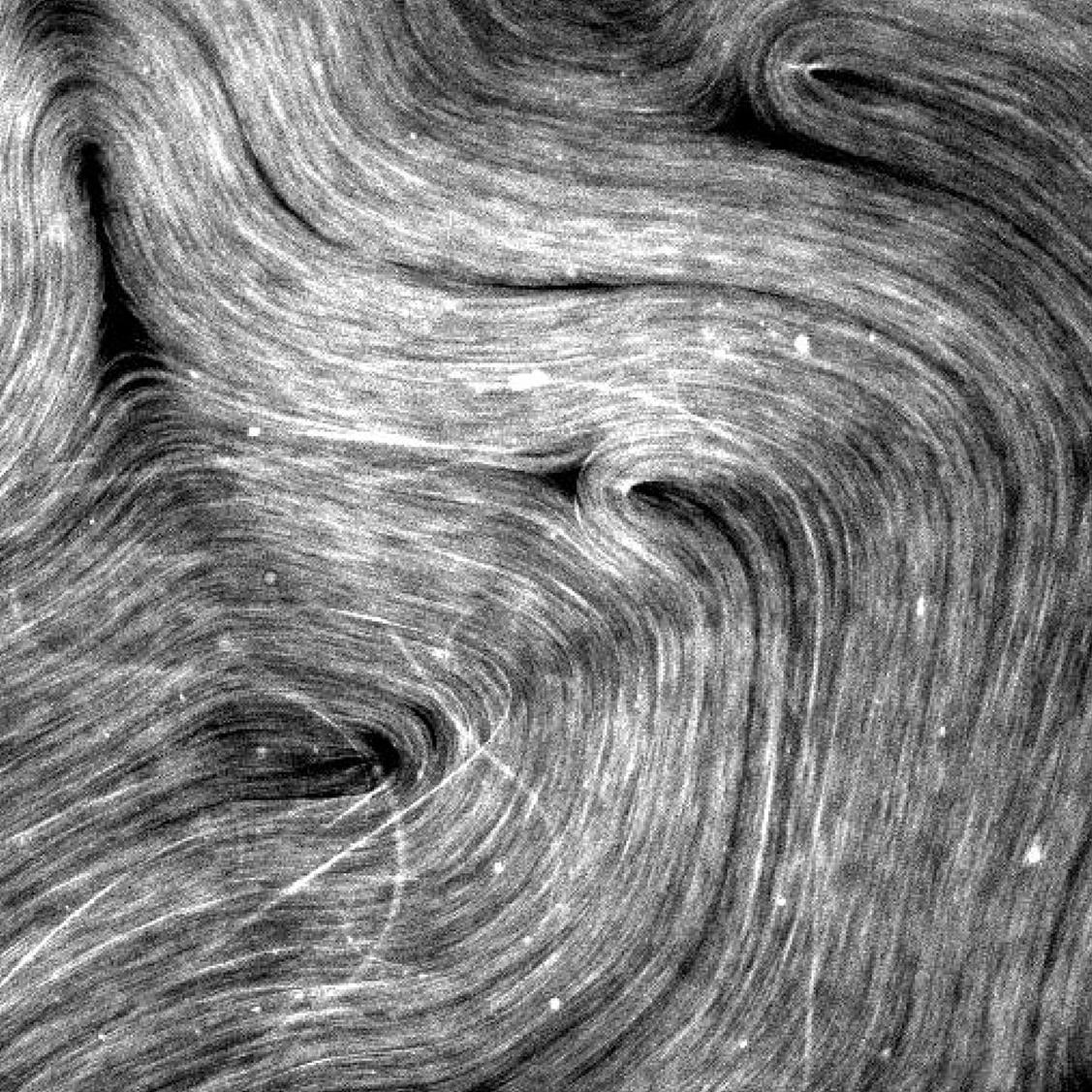

Three noticeable out-of-plane microtubule bundles are misaligned with the rest of the microtubules at the bottom left of the image.

Active matter is any collection of materials or systems composed of individual units that can move on their own, thanks to self-propulsion or autonomous motion. They can be of any size — think clouds of bacteria in a petri dish, or schools of fish.

Roman Grigoriev is mostly interested in the emergent behaviors in active matter systems made up of units on a molecular scale: tiny systems that convert stored energy into directed motion, consuming energy as they move and exerting mechanical force.

“Active matter systems have garnered significant attention in physics, biology, and materials science due to their unique properties and potential applications,” Grigoriev, a professor in the School of Physics at Georgia Tech, explains.

“Researchers are exploring how active matter can be harnessed for tasks like designing new materials with tailored properties, understanding the behavior of biological organisms, and even developing new approaches to robotics and autonomous systems,” he says.

But that’s only possible if scientists learn how the microscopic units making up active matter interact, and whether they can affect these interactions and thereby the collective properties of active matter on the macroscopic scale.

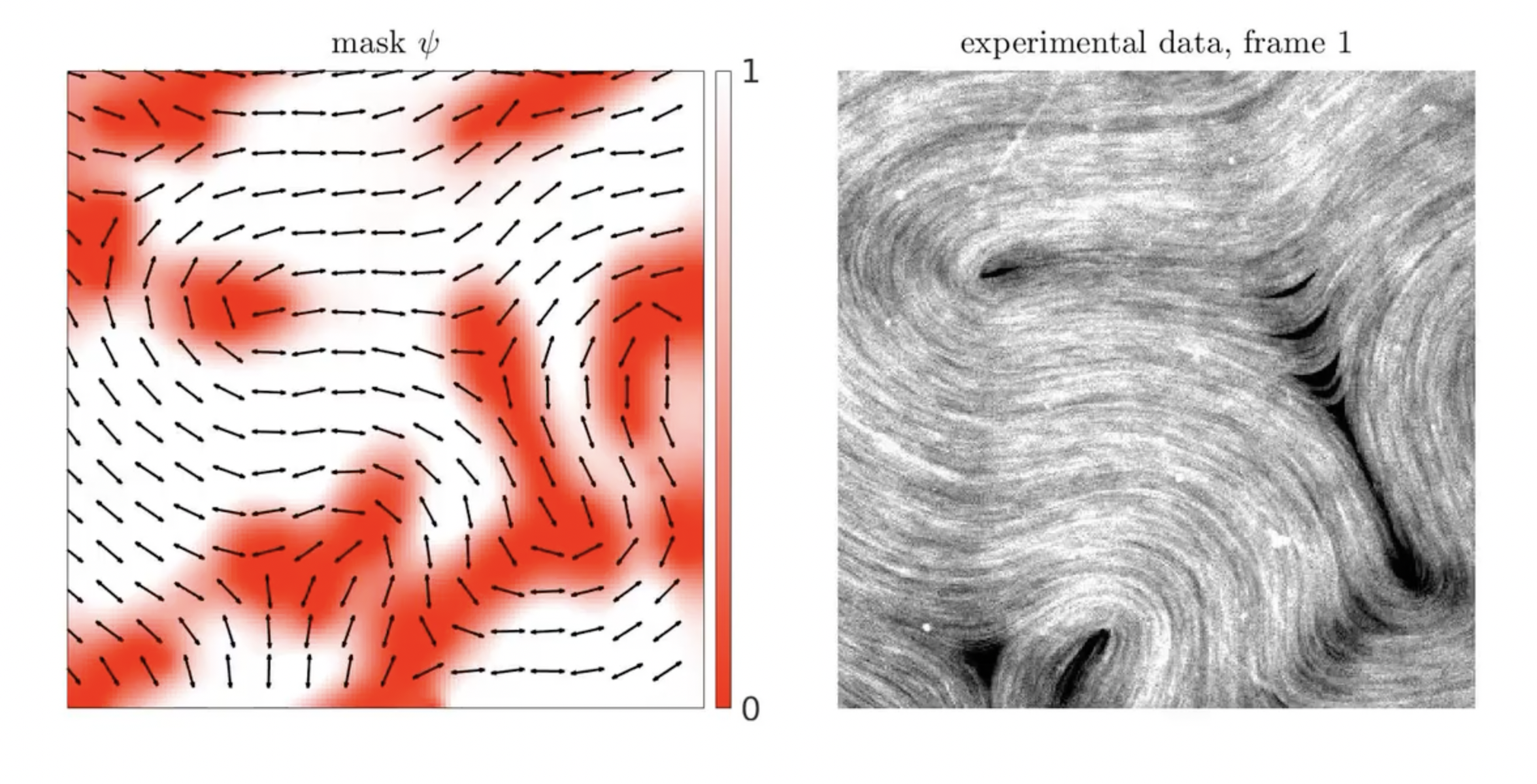

Grigoriev and his research colleagues have found a potential first step by developing a new model of active matter that generated new insight into the physics of the problem. They detail their methods and results in a new study published in Science Advances, “Physically informed data-driven modeling of active nematics.”

Grigoriev’s co-authors include School of Physics graduate researchers Matthew Golden and Jyothishraj Nambisan, as well as Alberto Fernandez-Nieves, professor in the Department of Condensed Matter Physics at the University of Barcelona and a former associate professor of Physics at Georgia Tech.

A two-dimensional 'solution?'

The research team focused on one of the most common examples of active matter, a suspension of self-propelled particles, such as bacteria or synthetic microswimmers, in a liquid medium. These particles cluster, swarm, and otherwise form dynamic patterns due to their ability to move and interact with each other.

“In our paper, we use data from an experimental system involving suspensions of microtubules, which provide structural support, shape, and organization to eukaryotic cells (any cell with a clearly defined nucleus),” Grigoriev explains.

Microtubules, as well as actin filaments and some bacteria, are examples of nematics, rod-like objects whose “heads” are indistinguishable from their “tails.”

The motion of microtubules is driven by molecular motors made of the protein kinesin, which consume adenosine triphosphate (ATP) dissolved in the liquid to slide pairs of neighboring microtubules past one another. The researchers’ system used microtubules suspended between layers of oil and water, which restricted their movement to two dimensions.

“That makes it easier to visualize the microtubules and track their motion. By changing the kinesin or ATP concentrations, we could control the motion of the microtubules, making this experimental setup by far one of the most popular in the study of active nematics and even more generally, active matter,” Grigoriev said.

‘This is where the story gets interesting’

Getting a clearer picture of microtubular movements was just one discovery in the study.

Another was learning more about the relationship between the characteristic patterns that describe the orientation of nematic molecules and their motion on a macroscopic scale. The orientation patterns, or topological defects, determine how the nematics orient themselves at the oil-water interface, that is, in two spatial dimensions.

“Understanding the relationship between the flow — the global property of the system, or the fluid — and the topological defects, which describe the local orientation of microtubules, is one of the key intellectual questions facing researchers in the field,” Grigoriev said. “One needs to correctly identify the dominant physical effects which control the interaction between the microtubules and the surrounding fluid.”

“And this is where the story gets interesting,” Grigoriev adds. “For over a decade, it was believed that the key physics were well understood, with a large number of theoretical and computational studies relying on a generally accepted first principles model” — that is, one based on established science — “that was originally derived for active nematics in three spatial dimensions.”

In that model, the dynamics of active nematics (more specifically, the length and time scales of the emerging patterns) are controlled by a pair of physical constants describing the assumed dominant physical effects: the stiffness of the microtubules (their resistance to bending) and the activity, which describes the stress, or force, generated by the kinesin motors.

“Using a data-driven approach, we inferred the correct form of the model demonstrating that, for two-dimensional active nematics, the dominant physical effects are different from what was previously assumed,” Grigoriev says. “In particular, the time scale is set by the rate at which bundles of microtubules are stretched by kinesin.” It is this rate, rather than the stress, that is constant.

The danger of confirmation bias

Grigoriev said the results of the study have important implications for the understanding of active nematics and their emergent behaviors, explaining that they help rationalize a number of recent, previously unexplained experimental results, such as how the density of topological defects scales with the concentration of kinesin and the viscosity of the fluid layers.

“More importantly, our results demonstrate the danger associated with traditional assumptions that established research communities often land on and have difficulty overcoming,” Grigoriev said. “While data-driven methods may have their own sources of bias, they offer a perspective which is different enough from more traditional approaches to become a valuable research tool in their own right.”

About Georgia Institute of Technology

The Georgia Institute of Technology, or Georgia Tech, is one of the top public research universities in the U.S., developing leaders who advance technology and improve the human condition. The Institute offers business, computing, design, engineering, liberal arts, and sciences degrees. Its more than 45,000 undergraduate and graduate students, representing 50 states and more than 148 countries, study at the main campus in Atlanta, at campuses in France and China, and through distance and online learning. As a leading technological university, Georgia Tech is an engine of economic development for Georgia, the Southeast, and the nation, conducting more than $1 billion in research annually for government, industry, and society.

Funding: This study was funded by the National Science Foundation, grant no. CMMI-2028454. “Physically informed data-driven modeling of active nematics,” DOI: 10.1126/sciadv.abq6120

Left, a graphic showing microtubules orienting themselves in the experiment. Right, a still from a video showing microtubules moving at the interface of oil and water. Graphic by Roman Grigoriev

Roman Grigoriev

Writer: Renay San Miguel

Communications Officer II/Science Writer

College of Sciences

404-894-5209

Editor: Jess Hunt-Ralston

NSF RAPID Response to Earthquakes in Turkey

Jul 26, 2023 —

In February, a major earthquake event devastated the south-central region of the Republic of Türkiye (Turkey) and northwestern Syria. Two earthquakes, one magnitude 7.8 and one magnitude 7.5, occurred nine hours apart, centered near the heavily populated city of Gaziantep. The total rupture lengths of both events were up to 250 miles. The president of Turkey has called it the “disaster of the century,” and the threat is still not over — aftershocks could still affect the region.

Now, Zhigang Peng, a professor in the School of Earth and Atmospheric Sciences at Georgia Tech, and graduate students Phuc Mach and Chang Ding, alongside researchers at the Scientific and Technological Research Council of Türkiye (TÜBİTAK) and the University of Missouri, are using small seismic sensors to better understand how, why, and when these earthquakes occur.

Funded by a National Science Foundation (NSF) Rapid Response Research (RAPID) grant, the project is unique in that it aims to respond to the crisis while it is still unfolding. NSF RAPID grants are used when there is severe urgency with regard to the availability of, or access to, data, facilities, or specialized equipment, including quick-response research on natural or anthropogenic disasters and similar unanticipated events.

In an effort to better map the aftershocks of the earthquake event — which can occur weeks or months after the main event — the team placed approximately 120 small sensors, called nodes, in the East Anatolian fault region this past May. Their deployment continues through the summer.

It’s the first time sensors like this have been deployed in Turkey, says Peng.

“These sensors are unique in that they can be placed easily and efficiently,” he explains. “With internal batteries that can work up to one month when fully charged, they’re buried in the ground and can be deployed within minutes, while most other seismic sensors need solar panels or other power sources and take much more time and space to deploy.” Each node is about the size of a 2-liter soda bottle and can measure ground movement in three directions.

“The primary reason we’re deploying these sensors quickly following the two mainshocks is to study the physical mechanisms of how earthquakes trigger each other,” Peng adds. A mainshock is the largest earthquake in a sequence. “We’ll use advanced techniques such as machine learning to detect and locate thousands of small aftershocks recorded by this network. These newly identified events can provide important new clues on how aftershocks evolve in space and time, and what drives foreshocks that occur before large events.”

Unearthing fault mechanisms

The team will also use the detected aftershocks to illuminate active faults where three tectonic plates come together — a region known as the Maraş Triple Junction. “We plan to use the aftershock locations and the seismic waves from recorded events to image subsurface structures where large damaging earthquakes occur,” says Mach, the Georgia Tech graduate researcher. This will help scientists better understand why sometimes faults ‘creep’ without any large events, while in other cases faults lock and then violently release elastic energy, creating powerful earthquakes.

Getting high-resolution data of the fault structures is another priority. “The fault line that ruptured in the first magnitude 7.8 event has a bend in it, where earthquake activity typically terminates, but the earthquake rupture moved through this bend, which is highly unusual,” Peng says. By deploying additional ultra-dense arrays of sensors during their upcoming trip this summer, the team hopes to help researchers ‘see’ the bend beneath the Earth’s surface, allowing them to better understand how fault properties control earthquake rupture propagation.

The team also aims to learn more about the relationship between the two main shocks that recently rocked Turkey, a pair sometimes called a doublet. Doublet events can happen when the initial earthquake triggers a secondary earthquake by adding extra stress loading. While in this instance the second event occurred only nine hours after the first, such secondary earthquakes have been known to take place days, months, or even years after the initial one; a famous example is the sequence of earthquakes that spanned 60 years in the North Anatolian fault region of northern Turkey.

“Clearly the two main shocks in 2023 are related, but it is still not clear how to explain the time delays,” says Peng. The team plans to work with their collaborators at TÜBİTAK to re-analyze seismic and other types of geophysical data right before and after those two main shocks in order to better understand the triggering mechanisms.

“In our most recent trip in southern Türkiye, we saw numerous buildings that were partially damaged during the mainshock, and many people will have to live in temporary shelters for years during the rebuilding process,” Peng adds. “While we cannot stop earthquakes from happening in tectonically active regions, we hope that our seismic deployment and subsequent research on earthquake triggering and fault imaging can improve our ability to predict what will happen next — before and after a big one — and could save countless lives.”

Written By:

Selena Langner

Media Contact:

Jess Hunt-Ralston